Software testing is a constantly evolving part of the software development lifecycle, and a well-structured test plan is essential for delivering high-quality software products. A test plan acts as a roadmap for the testing process, outlining the scope, objectives, resources, schedule, and procedures that guide the testing team. It clarifies what needs to be tested, how it will be tested, and who will be involved, ensuring that the software meets its requirements and quality standards before release.

In this blog, we will explore the importance of test plans and what to include in a test plan template. We will also introduce you to 10 of the best software test plan templates that can help streamline your testing efforts.

What is a test plan?

A test plan is a comprehensive document defining a software application’s testing strategy. It serves as a blueprint, guiding the testing process from start to finish. The test plan details the scope of testing, objectives, resources required, the schedule, test cases, and methodologies to be followed.

It ensures that all stakeholders are on the same page regarding what will be tested and how it will be conducted.

The primary goals of a test plan include:

• Ensuring the software meets its functional and non-functional requirements

• Defining the scope and approach of testing

• Clarifying the roles and responsibilities of team members

• Identifying risks and outlining measures to mitigate them

Why is there a need for software test plans?

Software test plans play a crucial role in the Software Development Life Cycle (SDLC), serving as a foundation for successful project delivery. Here’s why having a structured test plan is essential:

Ensures thorough testing

A test plan ensures that every aspect of your software gets tested. Without a structured approach, it’s easy to overlook crucial components, which could lead to costly bugs down the line. A solid test plan helps you define exactly what needs testing, ensuring that no functionality or feature is left out.

Aligns testing with project goals

Your testing efforts should reflect the overall goals of the project. A well-defined test plan keeps your testing aligned with business objectives, whether you’re focused on delivering a flawless user experience, hitting performance benchmarks, or meeting compliance standards. It ensures that you’re testing what matters most to the success of your project.

Improves efficiency and focus

With a test plan, you and your team can work more efficiently. It outlines the tasks, timelines, and responsibilities, allowing you to focus on key areas rather than wasting time on ad-hoc testing. This clarity ensures everyone knows exactly what to do and when, which streamlines the entire process.

Facilitates communication and collaboration

Testing isn’t done in isolation. A test plan fosters better communication across your team by clearly outlining roles and expectations. Developers, testers, and stakeholders all have a shared understanding of the testing scope, schedule, and deliverables, reducing confusion and making collaboration smoother.

Manages resources effectively

Test plans help you allocate your resources—time, people, and tools—efficiently. You’ll know in advance what’s required for testing, allowing you to plan for the necessary hardware, software, and personnel. This way, you avoid bottlenecks and ensure that testing doesn’t hold up the overall project timeline.

Identifies and mitigates risks

One of the key advantages of having a test plan is risk management. By identifying potential risks early in the process, you can prioritize testing around those areas, making sure you address the most critical vulnerabilities before they turn into major problems. It also gives you the chance to outline backup strategies if things don’t go as expected.

Provides clear test criteria

A test plan lays down the criteria for what passes and what fails during testing. This clear definition helps eliminate ambiguity and ensures that your team and stakeholders are on the same page when it comes to quality standards. It’s much easier to make decisions when the success criteria are laid out upfront.

Facilitates tracking and reporting

Keeping track of testing progress is crucial, and a test plan makes this much easier. You’ll have clear milestones and deliverables that allow you to measure how testing is advancing. This structure also makes reporting to stakeholders simpler, giving them visibility into the progress and quality of the software.

Ensures consistency and repeatability

With a detailed test plan, you can ensure consistency in your testing efforts. The plan provides a standardized approach, making it easier to replicate tests across different stages or in future projects. This consistency helps improve the overall reliability of your testing process.

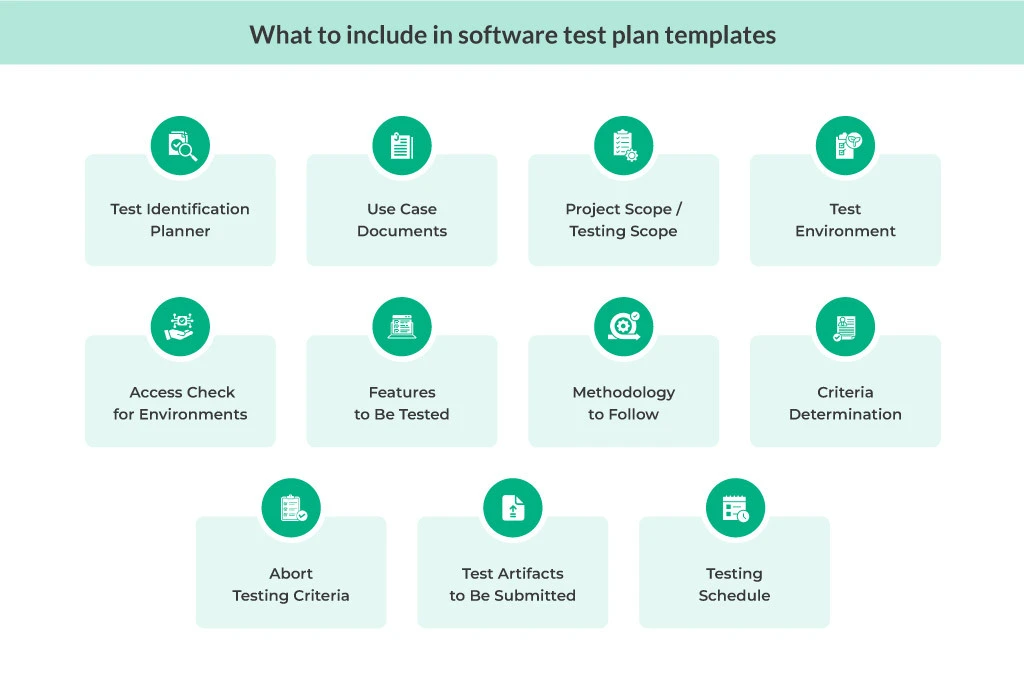

What should be included in software test plan templates?

A solid test plan includes several key elements. Below is a breakdown of what to include in your software test plan templates:

1. Test Identification Planner

This section is where you define unique identifiers for each test case. It helps in organizing and tracking test cases throughout the testing process. Clear identification makes it easier to refer to specific tests during discussions and reporting.
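As an illustration, the sketch below generates identifiers in a hypothetical `TC-<MODULE>-<NNN>` format and keeps a registry that maps each ID to its test case title. The format, the `CaseIdPlanner` class, and its method names are assumptions made for this example, not a standard; adapt them to whatever your test management tool expects.

```python
from collections import defaultdict


class CaseIdPlanner:
    """Assigns readable, unique test case IDs such as TC-CART-001.

    The TC-<MODULE>-<NNN> scheme is a hypothetical convention for illustration.
    """

    def __init__(self, prefix="TC"):
        self.prefix = prefix
        self._counters = defaultdict(int)  # number of IDs issued per module
        self.registry = {}                 # test case ID -> test case title

    def assign(self, module, title):
        """Return a new ID for `title` under `module` and record it in the registry."""
        self._counters[module] += 1
        case_id = f"{self.prefix}-{module.upper()}-{self._counters[module]:03d}"
        self.registry[case_id] = title
        return case_id


planner = CaseIdPlanner()
print(planner.assign("cart", "Add an item to the cart"))        # TC-CART-001
print(planner.assign("cart", "Remove an item from the cart"))   # TC-CART-002
print(planner.assign("login", "Log in with valid credentials")) # TC-LOGIN-001
```

Keeping a per-module counter means IDs stay short and group naturally by feature, which helps when referring to tests in discussions and reports.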

2. Use Case Documents

Use case documents outline the real-world scenarios in which the software will be used. Including these in your test plan ensures that the tests are aligned with user behavior, helping to verify that the application performs well in actual use conditions.

3. Project Scope (Testing Scope)

The testing scope defines the boundaries of what will and won’t be tested. This helps set clear expectations, avoid scope creep, and focus the team’s efforts on the critical components of the software that need testing. It’s crucial to prevent any misunderstandings later in the project.

4. Test Environment

Your test plan should outline the specific hardware, software, and network configurations required to conduct tests. This includes both the physical and virtual environments where testing will take place, ensuring that conditions mimic real-world scenarios as closely as possible.

5. Access Check for Environments

Before testing begins, it’s important to ensure that all team members have the appropriate access to the testing environments. This section should confirm that permissions are set up, preventing any delays due to lack of access.

6. Features to Be Tested

List all the features and functionalities that are in scope for testing. This section should cover both the core features and any specific areas that are particularly critical to the success of the project. Clearly defining these features helps prioritize testing efforts.

7. Methodology to Follow

This section defines the overall approach to testing—whether manual, automated, or a mix of both. It also includes details on the testing techniques (e.g., black-box testing, white-box testing) that will be employed, ensuring that everyone is on the same page about how tests will be conducted.

8. Criteria Determination

Here, you’ll define the criteria for success and failure for each test case. This ensures that everyone understands what constitutes a “pass” or “fail” result, eliminating any ambiguity during the evaluation of the software’s performance.

9. Abort Testing Criteria

In some cases, testing needs to be stopped before completion—whether due to a critical bug, hardware failure, or other issues. This section outlines the conditions under which testing will be halted and the protocols for addressing these interruptions.
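Abort (or suspension) criteria are easiest to apply consistently when they are written as explicit checks. The sketch below assumes three hypothetical conditions based on the examples above: the test environment is unavailable, a blocking defect is open, or the failure rate exceeds a threshold. The `RunStatus` fields, the `should_abort` function, and the 50% threshold are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RunStatus:
    executed: int                # test cases executed so far
    failed: int                  # test cases that failed
    open_blockers: int           # open defects that prevent further testing
    environment_available: bool  # False after, e.g., a hardware failure


def should_abort(status, max_failure_rate=0.5):
    """Return (abort, reason) under hypothetical abort criteria."""
    if not status.environment_available:
        return True, "test environment unavailable"
    if status.open_blockers > 0:
        return True, f"{status.open_blockers} open blocking defect(s)"
    # Guard against division by zero before any test has run.
    if status.executed and status.failed / status.executed > max_failure_rate:
        return True, "failure rate above threshold; build is likely unstable"
    return False, "continue testing"


print(should_abort(RunStatus(executed=40, failed=25, open_blockers=0, environment_available=True)))
# (True, 'failure rate above threshold; build is likely unstable')
```

Returning a reason alongside the decision makes it easy to record why testing was halted, which the protocol for handling interruptions will need.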

10. Test Artifacts to Be Submitted

After testing is complete, there are certain documents and artifacts that must be submitted. This could include test cases, test reports, defect logs, and more. Documenting these expectations in the test plan ensures that nothing is overlooked during handoffs or reporting.

11. Testing Schedule

Lastly, include a detailed schedule outlining when each phase of testing will take place, including deadlines for test case development, execution, and reporting. This helps keep the project on track and ensures that testing is completed in a timely manner.

10 Best Software Test Plan Templates

Selecting the right test plan template can significantly enhance the efficiency of your software testing process. Here, we’ll dive into 10 different software test plan templates, explaining their ideal use cases and how they can help ensure successful project outcomes.

1. Basic Test Plan Template

Ideal for small projects or teams new to testing

The basic test plan template is perfect for small projects or teams that are just getting started with testing. It provides a simple and straightforward structure that covers the fundamental aspects of a test plan, such as:

• Testing objectives

• Scope of testing

• Resources (team members, tools, etc.)

• Test schedule

• Deliverables

This template focuses on the essentials, making it easy to use without overwhelming teams. It’s ideal for startups, small-scale apps, or internal tools where testing is important but not overly complex.

Example Use: A small e-commerce site looking to test its shopping cart functionality

| Component | Description |
|---|---|
| Objective | To validate core functionalities of the system (e.g., user login, shopping cart, checkout) and ensure that the features perform as per the business requirements |
| Minimum viable testing | User login and registration; shopping cart functionality; checkout process; basic product search |
| Features to be Tested | |
| Test Approach | |
| Test Environment | Staging environment simulating production; web browsers: Chrome, Firefox, Safari; mobile devices for responsive testing; sandbox for payment integration |
| Test Schedule | Test Preparation: 1 week to develop test cases; Test Execution: 2 weeks to complete all tests; Bug Fixes & Retesting: 1 week for resolving defects and performing retests |
| Roles & Responsibilities | QA Engineer will prepare and execute tests, log defects, and update test documentation; Developers will help fix bugs based on defect reports and provide feedback; Project Manager will monitor progress and ensure testing aligns with project timelines |
| Entry & Exit Criteria | |
| Risk Management | Risks: potential delays in development, environment instability, limited retesting time. Mitigation: set up the test environment early and validate its stability; maintain continuous communication with the development team to ensure code readiness; allocate buffer time for retesting. |
| Test Deliverables & Documentation | |
| Test Cases and Scripts | Test cases will be created and stored in a repository. Provide a link to the repository once the test cases are developed. |
| Metrics & Reporting | Test Execution Progress: track the number of test cases executed, passed, and failed; Defect Metrics: report open versus closed defects and classify them by severity and priority; Reporting Frequency: provide daily or weekly progress updates to stakeholders based on the project's needs |
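The metrics in this template are simple counts and ratios. A minimal sketch of how they might be computed from raw results follows; the input shapes (a list of `"pass"`/`"fail"` strings and defect dictionaries with `status` and `severity` keys) and the function names are assumptions for illustration, since in practice this data usually comes from a test management or defect tracking tool.

```python
from collections import Counter


def execution_progress(results, total_planned):
    """Summarize execution; `results` holds "pass" or "fail" per executed test case."""
    counts = Counter(results)
    executed = counts["pass"] + counts["fail"]
    pass_rate = round(100 * counts["pass"] / executed, 1) if executed else 0.0
    return {
        "executed": executed,
        "passed": counts["pass"],
        "failed": counts["fail"],
        "not_run": total_planned - executed,
        "pass_rate_pct": pass_rate,
    }


def defect_summary(defects):
    """Count open vs. closed defects and break open defects down by severity."""
    open_defects = [d for d in defects if d["status"] == "open"]
    closed = sum(1 for d in defects if d["status"] == "closed")
    return {
        "open": len(open_defects),
        "closed": closed,
        "open_by_severity": dict(Counter(d["severity"] for d in open_defects)),
    }


print(execution_progress(["pass"] * 18 + ["fail"] * 2, total_planned=25))
# {'executed': 20, 'passed': 18, 'failed': 2, 'not_run': 5, 'pass_rate_pct': 90.0}
print(defect_summary([
    {"status": "open", "severity": "high"},
    {"status": "closed", "severity": "low"},
    {"status": "open", "severity": "medium"},
]))
# {'open': 2, 'closed': 1, 'open_by_severity': {'high': 1, 'medium': 1}}
```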

2. Agile Test Plan Template

Tailored for Agile projects

For teams following Agile methodologies, this template is designed to align with Agile principles. Agile testing requires frequent feedback, continuous testing, and flexibility, and this template reflects that by including:

• Iterative testing cycles (aligned with sprints)

• Backlog prioritization

• Continuous integration points

• Testing during development, rather than at the end

• Daily or weekly updates to reflect changes in the project

This template emphasizes real-time collaboration and adaptability, making it highly suitable for projects where requirements evolve rapidly.

Example Use: A mobile app being developed in sprints

| Component | Description |
|---|---|
| Objective | To continuously test and validate features during Agile sprints, ensuring that each iteration delivers functional, tested, and integrated code that meets user stories and business requirements. |
| Features to be Tested | Core functionality as defined by the current sprint's user stories (e.g., user login, product purchase, notifications); integration with third-party APIs; performance under load conditions |
| Test Approach/Strategy | Test-Driven Development (TDD), where unit tests are written before the corresponding code; automated testing for regression and repetitive tasks; manual testing for exploratory tests and complex scenarios; Continuous Integration (CI) testing after every code commit |
| Test Environment | A staging environment updated with the latest code after every build; multiple browser configurations (Chrome, Firefox, Edge); device simulators for mobile testing; integration with CI tools (Jenkins, CircleCI) to run automated tests after each code commit |
| Test Schedule | Sprint Planning: testing tasks are planned alongside development tasks; Daily Testing: continuous testing during the sprint; Post-Sprint: defect fixes and retesting after each sprint if needed, with unresolved bugs carried over to subsequent sprints |
| Roles & Responsibilities | QA Team: writes test cases, automates tests, and conducts manual testing; Developers: write unit tests and fix defects; Scrum Master: facilitates daily meetings, ensures communication between development and testing teams, and ensures testing is part of each sprint cycle |
| Entry Criteria | User stories defined and ready for development; test data and environments set up; unit tests prepared |
| Exit Criteria | All acceptance criteria for user stories met; critical defects fixed or logged for future sprints |
| Risk Management | Risks: changing requirements, tight sprint deadlines, incomplete test automation. Mitigation: close collaboration with development, frequent backlog grooming, prioritizing high-risk areas, and ensuring automated tests cover critical workflows to reduce regression issues |
| Test Deliverables | Test plan; test cases for user stories; automated test scripts; defect reports; sprint-specific test summary reports; retrospective reports to improve future sprint testing |
| Test Cases and Scripts | Test cases are created for each user story, and scripts for automated testing are stored in a repository. Provide a link to the repository once it is ready. |
| Metrics & Reporting | Sprint Test Coverage: % of user stories with associated test cases; Defect Metrics: open/closed defects and the severity and priority of issues; tracking of completed user stories and their corresponding tests; regular status updates during daily stand-ups and sprint reviews |
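Sprint test coverage, as defined in this template, is the share of the sprint's user stories that have at least one associated test case. A small sketch, assuming a hypothetical mapping from user-story IDs to test case IDs (the IDs and function names below are invented for illustration):

```python
def sprint_test_coverage(story_tests):
    """Return the % of user stories that have at least one associated test case.

    `story_tests` maps a user-story ID to the list of its test case IDs.
    """
    if not story_tests:
        return 0.0
    covered = sum(1 for tests in story_tests.values() if tests)
    return round(100 * covered / len(story_tests), 1)


def uncovered_stories(story_tests):
    """List user stories that still have no test cases, e.g. for the sprint review."""
    return sorted(story for story, tests in story_tests.items() if not tests)


sprint = {
    "US-101": ["TC-LOGIN-001", "TC-LOGIN-002"],
    "US-102": ["TC-PURCHASE-001"],
    "US-103": [],
    "US-104": ["TC-NOTIFY-001"],
}
print(sprint_test_coverage(sprint))  # 75.0
print(uncovered_stories(sprint))     # ['US-103']
```

Listing the uncovered stories alongside the percentage gives the team something actionable to raise at the daily stand-up.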

3. Waterfall Test Plan Template

Designed for Waterfall methodologies

The Waterfall test plan template is structured around the sequential phases of the Waterfall model, where each phase of development flows into the next. In this template, testing is carried out after the development phase is completed. It includes:

• Defined phases of testing (e.g., unit testing, system testing, acceptance testing)

• Strict schedules tied to project milestones

• Detailed test scripts aligned with project documentation

• Limited flexibility for changes once testing begins

This template works best for projects with well-defined requirements that are unlikely to change during the development process.

Example Use: Test plan for a desktop application with well-defined features

Objective: The primary goal of this test plan is to validate the desktop application’s functionality, performance, and usability by systematically testing its features after the development phase. The project follows the Waterfall model, ensuring thorough testing based on predefined requirements without the need for significant changes once testing begins.

Features to be Tested: We will be testing the following features of the desktop application:

• Core functionalities such as user login, file management, and settings.

• User interface and navigation across different modules.

• Integration with external libraries or APIs.

• Data handling and storage.

• Performance under typical and peak usage conditions.

Test Approach: To streamline the process, testing will be conducted in distinct phases following the completion of the development phase. These phases include:

Phase #1: Unit Testing: Validate individual components or modules for correctness.

Phase #2: Integration Testing: Ensure that different modules of the application interact correctly.

Phase #3: System Testing: Perform end-to-end testing of the entire application to verify that it meets the specified requirements.

Phase #4: Acceptance Testing: Validate the application against user requirements to confirm it is ready for deployment.

Test Environment: The test environment will simulate the production environment as closely as possible to ensure accurate results.

• Operating Systems: Windows, macOS, and Linux.

• Hardware Configurations: Various desktop configurations (RAM, processor types).

• Test Data: Use predefined datasets and user accounts for testing.

Test Schedule: The testing schedule is aligned with the project’s milestones and follows the completion of each development phase. The schedule is strictly adhered to as per the Waterfall methodology.

• Test Planning occurs after requirements gathering and during the design phase.

• Test Execution will start after the development phase and will follow the sequence of unit testing, integration testing, system testing, and acceptance testing.

• Bug Fixing and Retesting will be conducted after each testing phase, with retesting focusing on fixed issues.

Roles & Responsibilities:

Test Lead: Oversees the test planning and execution, ensuring all phases are completed on time.

QA Engineers: Execute test cases, report defects, and perform retesting.

Developers: Address defects based on the feedback from testing.

Project Manager: Aligns testing progress with project timelines and milestones.

Stakeholders: Review acceptance testing results and provide final approval for deployment.

Entry & Exit Criteria: We will adhere to the following criteria:

• Entry Criteria:

- The development phase is completed.

- The test environment is set up and validated.

- All test cases and scripts are ready.

• Exit Criteria:

- All critical test cases have passed.

- No open high-severity defects remain.

- Test coverage meets or exceeds the expected level.
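Exit criteria like these can be checked mechanically at the end of each phase. In the sketch below, the `PhaseResults` fields, the `exit_checks` function, and the 90% coverage target are assumptions; the plan itself only says coverage must meet "the expected level", so substitute your project's actual threshold.

```python
from dataclasses import dataclass


@dataclass
class PhaseResults:
    critical_total: int      # critical test cases planned for the phase
    critical_passed: int     # critical test cases that passed
    open_high_severity: int  # high-severity defects still open
    coverage_pct: float      # measured test coverage, in percent


def exit_checks(results, expected_coverage_pct=90.0):
    """Evaluate each exit criterion separately so failures are easy to report."""
    return {
        "all critical test cases passed": results.critical_passed == results.critical_total,
        "no open high-severity defects": results.open_high_severity == 0,
        "coverage meets expected level": results.coverage_pct >= expected_coverage_pct,
    }


checks = exit_checks(PhaseResults(critical_total=50, critical_passed=50,
                                  open_high_severity=1, coverage_pct=92.5))
print(all(checks.values()))                             # False
print([name for name, ok in checks.items() if not ok])  # ['no open high-severity defects']
```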

Risk Management:

• Risks:

- Delays in the development phase may shorten the testing timeline.

- Inconsistencies between the test and production environments.

- Changes in requirements after development may affect the test plan.

• Mitigation:

- Close collaboration with the development team to ensure adherence to timelines.

- Regular reviews to ensure the test environment aligns with production.

- Document and manage requirement changes carefully and adjust test cases accordingly.

Test Deliverables:

Test Plan Document [Add link] outlines the testing strategy and schedule.

Test Cases Document [Add link] contains test cases covering all features and functionalities.

Defect Reports [Add link] log the defects identified during each testing phase, categorized by severity.

Test Execution Summary [Add link] summarizes the test results for each phase.

Final Test Report [Add link] offers a comprehensive report summarizing the test execution, defect status, and readiness for deployment.

Test Cases: All test cases will be detailed and stored in a central repository following the test planning phase. Test scripts for automation (where applicable) will be linked to the repository for reference and execution.

Metrics & Reporting: Regular reporting will be provided to stakeholders based on the test progress:

• Test Execution Progress: Track the number of test cases executed, passed, and failed.

• Defect Metrics: Monitor open vs. closed defects and categorize them by severity.

• Test Coverage: Ensure all features are adequately covered by test cases.

• Reporting Frequency: Weekly status reports to stakeholders, with additional reports at key project milestones.

4. Risk-Based Test Plan Template

Prioritizes test cases based on risk impact

Risk-based test plans prioritize testing efforts based on the potential risks associated with different features or components of the software. This template focuses on identifying high-risk areas and addressing them first. Key elements include:

• Risk assessment and categorization

• Test cases ranked by risk (high, medium, low)

• Strategies to mitigate risks early in testing

• Continuous monitoring of risk throughout the testing process

This approach ensures that the most critical areas are thoroughly tested, reducing the likelihood of high-impact issues in production.

Example:

| Component | Description |
|---|---|
| Objective | The objective of this risk-based test plan is to ensure that the most critical areas of the software, identified by their potential risk impact, are tested first and more thoroughly. The testing strategy will focus on minimizing business and technical risks by prioritizing high-risk areas in testing efforts. |
| Risk Assessment and Categorization | Before testing begins, a detailed risk assessment will be conducted to identify high-, medium-, and low-risk areas of the software. Risks are assessed based on: the potential financial or operational impact of a feature failing in production; the technical complexity of the feature, which may increase the likelihood of defects; areas with a history of defects or instability; and critical dependencies, meaning features that are integral to other systems or components. The features will then be categorized by risk: High-Risk Features are business-critical or technically complex (e.g., payment processing, data encryption); Medium-Risk Features are important but non-critical (e.g., user profile management); Low-Risk Features have little business or technical impact (e.g., cosmetic UI changes). |
| Features to be Tested | High-Risk: payment processing, data security, user authentication, third-party API integrations; Medium-Risk: profile management, order tracking, notifications; Low-Risk: UI/UX adjustments, non-essential settings and preferences |
| Test Approach | Testing will follow risk-based prioritization. High-Risk: thorough manual and automated testing, regression testing after each build, performance and security testing, and exploratory testing; Medium-Risk: functional testing and automated regression; Low-Risk: basic functionality and visual checks |
| Test Schedule | Risk Assessment: during the planning phase; High-Risk Testing: in the early phases of testing; Medium-Risk Testing: after high-risk areas; Low-Risk Testing: conducted last or during regression |
| Roles & Responsibilities | Test Lead: oversees risk prioritization and progress monitoring; QA Engineers: execute test cases, prioritizing high-risk areas; Developers: fix defects in order of risk; Product Owners: review high-risk features |
| Entry & Exit Criteria | Entry Criteria: risk assessment complete, high-risk test cases ready, test environment set up. Exit Criteria: all high-risk test cases executed, critical defects resolved, medium- and low-risk areas tested (if time permits) |
| Risk Mitigation Strategies | High-Risk Features: identify high-risk areas, ensure frequent testing during development (continuous integration), and collaborate closely with development teams to address defects quickly. Medium-Risk Features: prioritize testing based on feature complexity, and perform regression testing after high-risk features are validated. Low-Risk Features: defer testing to later phases, with minimal impact on project timelines. |
| Metrics & Reporting | Risk-Based Coverage: % of high-, medium-, and low-risk features tested; Defect Metrics: number of defects found in high-risk areas vs. medium- and low-risk areas; Test Execution Progress: number of test cases executed, passed, and failed by risk level; Risk Mitigation Report: outcomes of high-risk testing and remaining risks |
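One common way to turn assessment factors like these into high, medium, and low categories is a risk matrix that multiplies impact by likelihood. The sketch below assumes each factor is rated from 1 to 3, treats business impact and dependency as the impact side and complexity and defect history as the likelihood side, and uses cut-offs of 6 and 3. All of these choices, and the `Feature` and `prioritize` names, are illustrative assumptions rather than part of the template.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    business_impact: int  # 1-3: financial/operational impact of a production failure
    complexity: int       # 1-3: technical complexity (raises defect likelihood)
    defect_history: int   # 1-3: past defects or instability
    dependency: int       # 1-3: how integral the feature is to other components

    def risk_score(self):
        impact = max(self.business_impact, self.dependency)
        likelihood = max(self.complexity, self.defect_history)
        return impact * likelihood  # ranges from 1 to 9

    def risk_level(self):
        score = self.risk_score()
        if score >= 6:
            return "High"
        if score >= 3:
            return "Medium"
        return "Low"


def prioritize(features):
    """Order features so the riskiest are tested first."""
    return sorted(features, key=lambda f: f.risk_score(), reverse=True)


features = [
    Feature("Cosmetic UI changes", 1, 1, 1, 1),
    Feature("User profile management", 2, 2, 1, 1),
    Feature("Payment processing", 3, 3, 2, 3),
]
for f in prioritize(features):
    print(f.name, f.risk_score(), f.risk_level())
# Payment processing 9 High
# User profile management 4 Medium
# Cosmetic UI changes 1 Low
```

Taking the maximum on each side means a single severe factor (for example, a long defect history) is enough to raise a feature's priority; a weighted sum is a reasonable alternative if your team prefers finer gradations.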

5. Compliance Test Plan Template

Suitable for projects in regulated industries

In regulated industries such as healthcare, finance, or aviation, it’s crucial to meet specific compliance standards. A compliance test plan template ensures that testing covers all required regulations, standards, and best practices, including:

• Testing aligned with regulatory frameworks (e.g., HIPAA, GDPR, SOX)

• Documentation of compliance criteria

• Audit trails for all test cases and results

• Focus on security, privacy, and legal standards

This template is best for industries where failing to comply with regulations can result in legal consequences or fines.

Example Use: A healthcare application that needs to comply with HIPAA

| Component | Description |
|---|---|
| Objective | The Compliance Test Plan aims to ensure that the software adheres to industry regulations (e.g., HIPAA, GDPR) to avoid penalties and ensure security and data protection. |
| Features to be Tested | Regulatory requirements (e.g., data encryption, user consent); privacy policies and data handling; security measures (access control, audits); logging and reporting functionalities |
| Test Approach/Strategy | Compliance Checklist: create test cases based on regulations; Documentation Validation: ensure documentation meets standards; Security Testing: validate encryption and data privacy; Audits: simulate audits |
| Test Environment | A dedicated compliance test environment mimicking production, with the necessary configurations (e.g., secure databases); security testing tools (e.g., vulnerability scanners) |
| Test Schedule | Planning: identify requirements and create test cases (1-2 weeks); Execution: test core compliance features (2-4 weeks); Final Audits: conduct final audits and sign-off (1 week) |
| Roles & Responsibilities | Compliance Officer: ensures compliance requirements are met; QA Team: executes tests against regulations; Developers: fix non-compliant areas; Legal Team: provides guidance on geography-specific legal matters associated with PII |
| Entry & Exit Criteria | Entry: all compliance documentation available; test environment configured. Exit: all compliance test cases pass; no high-risk violations |
| Risk Management | Risks: non-compliance may lead to legal action. Mitigation: regularly review standards, update test cases, and perform audits; engage legal teams early |
| Test Deliverables | Test plan; compliance checklist; compliance test cases; audit logs; test execution reports; final compliance sign-off document |
| Test Cases and Scripts | Test cases validate compliance (e.g., GDPR, HIPAA). Automated scripts validate encryption and access logs. Provide a link to the test case repository. |
| Metrics & Reporting | Compliance Coverage: % of requirements tested; Defect Rate: non-compliance issues discovered; Audit Log Review: % of successful validations; weekly updates to stakeholders |

6. Automated Test Plan Template

Outlines the automation strategy and tool integration

For teams using automated testing tools, this template helps streamline the process by focusing on automation strategies. Key sections include:

• Selection of automation tools (e.g., Selenium, JUnit)

• Test scripts for repetitive tasks

• Automation framework setup

• Continuous integration/continuous deployment (CI/CD) strategies

• Monitoring and reporting for automated test results

This template is ideal for projects with repetitive, time-consuming test cases that can be automated to improve efficiency and reduce manual effort.

Example:

| Component | Description |
|---|---|
| Objective | The Automated Test Plan aims to streamline testing by automating repetitive test cases, improving efficiency, and providing quick feedback on code changes, especially in large projects. It focuses on integrating automated tests with CI/CD pipelines. |
| Features to be Tested | Stable features that need frequent regression testing; core functionalities like login and payment processing; reusable APIs and workflows; user interface components under various loads |
| Test Approach/Strategy | Tool Selection: choose automation tools (e.g., Selenium, Cypress); Framework Development: configure the automation framework; Prioritization: focus on high-impact test cases; CI/CD Integration: link automated tests to CI pipelines |
| Test Environment | An environment mirroring production with configured automation tools; virtualized environments for parallel testing; CI/CD infrastructure supporting automated execution (e.g., Jenkins) |
| Test Schedule | Initial Setup: configure the framework (2-3 weeks); Test Development: ongoing scripting of automated tests; Execution: run test suites nightly and after code merges; Updates: review scripts during each sprint |
| Roles & Responsibilities | Automation Architect: designs the framework; QA Engineers: write and maintain test scripts; Developers: address defects found by the tests; DevOps Engineer: ensures CI/CD integration |
| Entry & Exit Criteria | Entry Criteria: tools and frameworks set up; test scripts reviewed; CI/CD pipeline configured. Exit Criteria: all critical test cases automated and successfully executed; no major failures in the pipeline |
| Risk Management | Risks: incomplete features may require frequent script maintenance. Mitigation: regular updates to scripts and creation of flexible, reusable code |
| Test Cases and Scripts | Focus on automatable features (e.g., regression, smoke tests). Scripts are maintained in version control (e.g., Git). Provide a link to the repository. |
| Metrics & Reporting | Test Coverage: % of test cases that are automated; Execution Time: duration of automated test runs; Defect Detection Rate: defects found by automated tests; CI/CD Health: % of successful test runs |
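To make automated regression tests a little more concrete, here is a minimal suite written with Python's built-in `unittest` module, an xUnit-style framework similar in spirit to JUnit. The `cart_total` function is a hypothetical stand-in for application code; in a real project the tests would import it from the codebase, and a CI job could run the suite on every merge with `python -m unittest`.

```python
import unittest


def cart_total(prices, discount_pct=0):
    """Hypothetical function under test: cart total after a percentage discount."""
    if not 0 <= discount_pct <= 100:
        raise ValueError("discount must be between 0 and 100")
    return round(sum(prices) * (1 - discount_pct / 100), 2)


class CartRegressionTests(unittest.TestCase):
    """Small, independent checks that are cheap to rerun after every change."""

    def test_total_without_discount(self):
        self.assertEqual(cart_total([10.00, 5.50]), 15.50)

    def test_total_with_discount(self):
        self.assertEqual(cart_total([100.00], discount_pct=10), 90.00)

    def test_invalid_discount_rejected(self):
        with self.assertRaises(ValueError):
            cart_total([10.00], discount_pct=150)
```

Keeping each test small and independent makes failures easy to trace in CI reports and limits how much script maintenance a single feature change causes.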

7. Exploratory Test Plan Template

Supports exploratory testing with a flexible and adaptive approach

Exploratory testing is more flexible and less structured than traditional testing methods. This template supports a free-form approach to testing, focusing on discovery, investigation, and adaptability. It includes:

• Test charters outlining what to explore

• Time-boxed sessions for spontaneous testing

• Documentation of findings in real-time

• Flexible testing goals that evolve as exploration progresses

This is a great option for early-stage projects or for finding unexpected issues in mature software where scripted tests may not cover every possible scenario.

Example Use: A new feature in a web application

Objective: The primary objective of this exploratory test plan is to uncover unexpected issues and evaluate new features in the web application. It emphasizes learning, discovery, and flexibility, allowing testers to adapt their approach based on their findings during testing.

Test Charters: Test charters define the high-level objectives for each exploratory session. Each session will focus on a specific area or feature:

• Charter Example 1: Explore the integration of the new feature with the user authentication system.

• Charter Example 2: Investigate edge cases related to form submissions in the new feature.

• Charter Example 3: Identify potential performance issues with the feature under different load conditions.

Time-Boxed Sessions: Testing will be conducted in short, time-boxed sessions, typically lasting 60 to 90 minutes each. This keeps testing focused while allowing testers to adjust their strategy based on what they discover. For example:

• Session 1: Explore the feature’s core functionality and user interactions.

• Session 2: Investigate how the feature interacts with other modules or components.

• Session 3: Identify usability issues or areas where the user experience may be confusing.
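A time-boxed session is easy to model as a small record holding its charter, its time box, and the notes captured while it runs. The Python sketch below is one possible shape, not a prescribed format; the `ExploratorySession` class and its fields are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ExploratorySession:
    """One time-boxed exploratory session driven by a single charter (hypothetical model)."""
    charter: str                                  # what to explore in this session
    timebox: timedelta = timedelta(minutes=90)    # upper end of the 60-90 minute range
    started_at: Optional[datetime] = None
    notes: List[str] = field(default_factory=list)

    def start(self, now: datetime) -> None:
        self.started_at = now

    def log(self, note: str) -> None:
        """Record an observation the moment it is made."""
        self.notes.append(note)

    def time_remaining(self, now: datetime) -> timedelta:
        """Time left in the box; the full box if the session has not started yet."""
        if self.started_at is None:
            return self.timebox
        return max(self.timebox - (now - self.started_at), timedelta(0))

    def is_over(self, now: datetime) -> bool:
        return self.time_remaining(now) == timedelta(0)


t0 = datetime(2024, 5, 1, 10, 0)
session = ExploratorySession(
    charter="Explore the feature's core functionality and user interactions",
    timebox=timedelta(minutes=60),
)
session.start(t0)
session.log("Save button stays enabled after a failed submit")
print(session.time_remaining(t0 + timedelta(minutes=45)))  # 0:15:00
print(session.is_over(t0 + timedelta(minutes=75)))          # True
```

Keeping the charter on the session record makes it straightforward to review, in a debrief, which charters have been covered and which still need a session.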

Flexible Testing Goals: Testing goals will be flexible and evolve as exploration progresses. Initial goals may include:

• Identifying potential bugs and issues.

• Uncovering edge cases or unexpected behaviors.

• Investigating usability or performance issues.

As sessions progress, new goals will be added as they emerge from the findings.

Real-Time Documentation of Findings: Testers will document their findings in real time during each session. Key observations, issues, and potential risks will be logged immediately. This includes:

• Session Notes: Quick, high-level notes about observations or suspected issues.

• Screenshots or Recordings: Visual evidence of bugs or unexpected behavior.

• Defect Logging: Immediate logging of bugs or issues into the defect tracking system (e.g., Jira) as they are discovered.

Test Environment:

Browsers and Devices: Testing will be conducted on a variety of browsers (Chrome, Firefox, Safari) and devices (desktop, tablet, mobile) to identify platform-specific issues.

Staging Environment: The test environment will simulate production to provide accurate insights into how the feature will perform in the live system.

Test Schedule:

• Initial Testing: Focus on basic exploration of the new feature.

• Mid-Testing: Deeper exploration of any identified issues or areas of concern.

• Final Testing: Wrap-up of findings, focusing on usability and performance, and revisiting any unresolved issues.

Roles & Responsibilities:

• Testers: Execute exploratory sessions, document findings, and report defects in real time.

• Developers: Collaborate with testers to provide quick feedback on reported issues.

• Test Lead: Organize and prioritize exploratory sessions, ensure findings are documented and reported, and adjust testing focus as needed.

Entry Criteria:

• The feature is available for testing in a staging environment.

• Test charters are defined for exploratory sessions.

• Test environment and tools are ready for use.

Exit Criteria:

• All key areas of the new feature have been explored.

• All critical bugs and issues have been documented and reported.

• Further exploration yields diminishing returns or no new findings.

Risk Management:

• Risks: The unstructured nature of exploratory testing may lead to incomplete coverage or overlooked areas.

• Mitigation: Use test charters to focus testing sessions, ensuring all critical areas are explored. Regular debriefs after sessions help to catch missed areas.

Test Deliverables:

• Session notes documenting the findings, insights, and discoveries from each session.

• Detailed logs of issues identified during testing.

• Session recordings or screenshots providing visual evidence of issues.

• A final summary report of key findings, including any unresolved issues and recommendations for further investigation.

Metrics & Reporting:

• Session Completion Metrics: Number of exploratory sessions completed, with a breakdown of findings.

• Defect Metrics: Number of issues discovered, categorized by severity and priority.

• Coverage Metrics: Areas or features explored, and whether all key components have been addressed.
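The three metric groups above can be derived from a simple log of sessions and findings. A minimal sketch, assuming each completed session records which features it explored and each finding carries a severity and a priority; the `Finding` record, the function name, and the feature names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Finding:
    session_id: int
    severity: str   # e.g. "critical", "major", "minor"
    priority: str   # e.g. "P1", "P2", "P3"


def exploratory_metrics(sessions: Dict[int, Set[str]],
                        findings: List[Finding],
                        key_features: Set[str]) -> dict:
    """sessions maps each completed session id to the features it explored."""
    explored = set().union(*sessions.values())
    covered = explored & key_features
    return {
        # Session completion metrics: sessions run and findings per session
        "sessions_completed": len(sessions),
        "findings_per_session": Counter(f.session_id for f in findings),
        # Defect metrics: issues broken down by severity and by priority
        "by_severity": Counter(f.severity for f in findings),
        "by_priority": Counter(f.priority for f in findings),
        # Coverage metrics: share of key features explored, plus the gaps
        "coverage_pct": 100.0 * len(covered) / len(key_features) if key_features else 0.0,
        "unexplored": sorted(key_features - explored),
    }


sessions = {1: {"login", "form submission"}, 2: {"login", "performance"}}
findings = [Finding(1, "major", "P2"), Finding(1, "minor", "P3"), Finding(2, "critical", "P1")]
key_features = {"login", "form submission", "performance", "notifications"}
report = exploratory_metrics(sessions, findings, key_features)
print(report["coverage_pct"], report["unexplored"])  # 75.0 ['notifications']
```

The `unexplored` list is the useful part for planning: it points directly at the areas the next charters should target.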

8. Performance Test Plan Template

Focuses on performance testing aspects such as load, stress, and scalability

Performance testing ensures that the software can handle expected workloads and scale effectively. This template focuses on testing the system under various conditions and includes:

• Load testing scenarios

• Stress testing to determine breaking points

• Scalability and capacity planning

• Response time benchmarks

• Resource usage monitoring (CPU, memory, network)

This template is ideal for applications where performance, stability, and scalability are critical, such as high-traffic websites or enterprise systems.
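To make "load testing scenarios" more concrete, here is a toy Python sketch of a single scenario: a fixed number of simulated users issue requests concurrently while the latency and outcome of each request are recorded. `fake_login` is a hypothetical stand-in for a real transaction, with made-up latency and a simulated 5% failure rate. Real load tests would normally use a dedicated tool such as JMeter or LoadRunner, as the template below suggests.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def fake_login() -> None:
    """Hypothetical stand-in for a real transaction such as an HTTP login call."""
    time.sleep(random.uniform(0.01, 0.03))   # simulated server latency
    if random.random() < 0.05:               # simulated 5% failure rate
        raise RuntimeError("login failed")


def timed_call(transaction):
    """Run one transaction and return (latency_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        transaction()
        ok = True
    except Exception:
        ok = False
    return time.perf_counter() - start, ok


def run_load_scenario(transaction, concurrent_users: int, requests_per_user: int):
    """Simulate concurrent users, each issuing requests back to back."""
    def user_session(_):
        return [timed_call(transaction) for _ in range(requests_per_user)]

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        per_user = list(pool.map(user_session, range(concurrent_users)))
    elapsed = time.perf_counter() - start
    results = [r for user in per_user for r in user]   # flatten to one list of samples
    return results, elapsed


results, elapsed = run_load_scenario(fake_login, concurrent_users=20, requests_per_user=5)
errors = sum(1 for _, ok in results if not ok)
print(f"{len(results)} requests in {elapsed:.2f}s, {errors} errors")
```

Re-running the scenario with increasing `concurrent_users` values (normal, then peak, then stress levels) is one simple way to watch for the point where latency or errors start to climb.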

Example Use: An online game that must handle high traffic

| Component | Description |
|---|---|
| Objective | Evaluate the application’s responsiveness, stability, scalability, and performance under various conditions to identify bottlenecks and ensure an optimal user experience. |
| Features to be Tested | • Performance under different loads (normal, peak, stress) • Response times for key transactions (login, data retrieval) • Scalability with added resources • Resource usage during peak loads |
| Test Approach/Strategy | Load Testing: Simulate concurrent user access; Stress Testing: Identify breaking points under extreme conditions; Endurance Testing: Monitor long-term performance; Scalability Testing: Assess performance with varying loads |
| Test Environment | • Mirror production settings closely • Use performance testing tools (e.g., JMeter, LoadRunner) for load simulation • Implement monitoring tools to capture response-time and resource data |
| Test Schedule | Preparation: Set up environment (1-2 weeks); Design: Develop test scenarios (1 week); Execution: Conduct tests (1-2 weeks); Analysis: Review findings (1 week) |
| Roles & Responsibilities | Performance Test Engineer: Design and execute tests, analyze results; Developers: Address performance issues; System Administrators: Monitor the environment; Project Managers: Oversee testing and report results |
| Entry & Exit Criteria | Entry: All features under test identified; stable test environment; tools configured. Exit: All tests executed; critical issues documented |
| Risk Management | Risks: Resolving identified bottlenecks may require significant architectural changes. Mitigation: Focus on high-risk areas and conduct regular stakeholder reviews |
| Test Deliverables | • Performance Test Plan Document • Test Scenarios and Scripts • Execution Reports • Performance Metrics • Bottleneck Analysis Report • Improvement Recommendations |
| Test Cases and Scripts | [Link to detailed test cases and scripts for performance testing] |
| Metrics & Reporting | KPIs: Response time, throughput, error rate; Load Test Reports: Summary with performance graphs; Bottleneck Reports: Identify performance issues; Optimization Recommendations: Suggestions for improvement |
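The KPIs listed in the table (response time, throughput, error rate) can be computed from raw request samples. Here is a minimal sketch, assuming each sample records a latency and a success flag. It uses the nearest-rank percentile definition, which is one of several in common use, so the numbers may differ slightly from what a particular tool reports. The `Sample` record and the helper names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    latency_ms: float   # response time of one request
    ok: bool            # False if the request errored


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)   # 1-based rank
    return ordered[max(rank, 1) - 1]


def kpis(samples: List[Sample], duration_s: float) -> dict:
    latencies = [s.latency_ms for s in samples]
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": len(samples) / duration_s,
        "error_rate_pct": 100.0 * sum(not s.ok for s in samples) / len(samples),
    }


latencies = [120, 80, 95, 300, 110, 90, 105, 85, 100, 1500]
samples = [Sample(ms, ok=(ms < 1000)) for ms in latencies]  # treat the 1.5 s request as a timeout error
print(kpis(samples, duration_s=2.0))
# {'p50_ms': 100, 'p95_ms': 1500, 'throughput_rps': 5.0, 'error_rate_pct': 10.0}
```

In this data, a single slow request determines p95 while the median stays at 100 ms. Percentiles surface tail latency that an average can blur.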

9. User Acceptance Test (UAT) Plan Template

Ensures the software meets end-user expectations and requirements

User acceptance testing ensures that the software meets the expectations and requirements of end-users. This template focuses on testing real-world scenarios to validate that the software behaves as expected. It includes:

• Acceptance criteria based on user requirements

• Test scenarios that mimic actual user workflows

• Sign-off protocols for stakeholders

• Clear documentation of feedback and issues reported by users

This template is often used near the end of the development process to confirm that the software is ready for deployment from the user’s perspective.

Example Use: A project management tool being reviewed by potential users

| Component | Description |
|---|---|
| Objective | Validate that the software meets end-user requirements before deployment, ensuring it functions correctly in real-world scenarios and is ready for final stakeholder approval. |
| Features to be Tested | • Core functionalities (e.g., user login, data entry) • Critical integration points • User interface and experience • Compliance with business rules |
| Test Approach/Strategy | • Develop user scenarios for testing • Facilitate feedback sessions • Conduct regression testing • Establish sign-off criteria for user acceptance |
| Test Environment | • A production-like environment for testing without affecting live data • Access to all relevant systems and tools for reporting issues |
| Test Schedule | • Preparation: Identify UAT participants (1 week) • Design: Create user scenarios (1 week) • Execution: Conduct UAT sessions (2-3 weeks) • Analysis: Review feedback (1 week) |
| Roles & Responsibilities | • UAT Coordinator: Oversee the process and documentation • End Users: Execute scenarios and provide feedback • Developers: Address reported issues • Business Analysts: Validate outcomes against requirements |
| Entry & Exit Criteria | Entry: All previous testing phases passed; user documentation is ready; the UAT environment is stable. Exit: All critical issues are resolved; participants sign off on the results |
| Risk Management | Risks: User resistance, unclear requirements, inadequate testing time. Mitigation: Engage users early, provide clear instructions, and allocate sufficient testing time |
| Test Deliverables | • UAT Test Plan Document • User Scenarios and Test Cases • Feedback Reports • Issue Logs • Final UAT Sign-off Document |
| Test Cases and Scripts | Provide a link to the repository or documentation that includes detailed user scenarios and test cases, focusing on the features and workflows that end-users will validate during UAT |
| Metrics & Reporting | • Define acceptance criteria metrics (e.g., % of scenarios passed) • Collect user feedback metrics • Track issue resolution in reports • Final UAT Report summarizing findings |
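The UAT exit criteria (critical issues resolved, participants signed off) and the "% of scenarios passed" metric can be checked mechanically. In this sketch, the record types, the signer names, and the 95% pass threshold are all assumptions for illustration. Your own acceptance criteria define the real target.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class ScenarioResult:
    scenario: str
    passed: bool


@dataclass
class Issue:
    title: str
    severity: str   # e.g. "critical", "major", "minor"
    resolved: bool


def uat_summary(results: List[ScenarioResult], issues: List[Issue],
                signed_off: Iterable[str], required_signers: Iterable[str],
                pass_threshold: float = 95.0) -> dict:
    """Check the UAT exit criteria; pass_threshold is an assumed, team-defined target."""
    pass_pct = 100.0 * sum(r.passed for r in results) / len(results) if results else 0.0
    open_critical = [i.title for i in issues if i.severity == "critical" and not i.resolved]
    missing = sorted(set(required_signers) - set(signed_off))
    return {
        "pass_pct": pass_pct,
        "open_critical": open_critical,
        "missing_signoffs": missing,
        "exit_criteria_met": pass_pct >= pass_threshold and not open_critical and not missing,
    }


results = [ScenarioResult("Create a project", True), ScenarioResult("Assign a task", True),
           ScenarioResult("Export a report", False), ScenarioResult("Invite a team member", True)]
issues = [Issue("Report export times out", "critical", False),
          Issue("Typo in settings menu", "minor", False)]
summary = uat_summary(results, issues, signed_off={"Product Owner"},
                      required_signers={"Product Owner", "Business Analyst"})
print(summary["pass_pct"], summary["exit_criteria_met"])  # 75.0 False
```

The open critical issue and the missing sign-off are reported separately, so the Final UAT Report can say exactly what still blocks release.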

10. Integration Test Plan Template

Tests interactions between system components or modules

Integration testing ensures that different modules or components of the software work together as expected. This template focuses on testing the interactions between systems or components, and includes:

• Test cases for data flow between modules

• Interface and API testing

• Verification of end-to-end functionality

• Handling of integration errors and edge cases

This template is essential for complex systems where multiple modules or third-party integrations are involved.

Example Use: A customer relationship management (CRM) system integrating with an email service

| Component | Description |
|---|---|
| Objective | Ensure integrated components interact as expected, verifying data flow and module communication. |
| Features to be Tested | • Data flow between components • API interactions • End-to-end functionality • Error handling mechanisms |
| Test Approach/Strategy | • Top-Down Integration Testing • Bottom-Up Integration Testing • Sandwich Testing (combination of both) • Incremental Testing |
| Test Environment | Dedicated environment simulating production, including integrated systems and necessary tools for monitoring interactions |
| Test Schedule | • Preparation: Define scenarios (1 week) • Test Design: Create test cases (1 week) • Execution: Conduct tests (2-3 weeks) • Review: Analyze results (1 week) |
| Roles & Responsibilities | • Test Lead: Coordinate efforts • Developers: Provide components and address issues • Testers: Execute test cases • Analysts: Validate requirements |
| Entry & Exit Criteria | Entry: Components passed unit testing; environment stable; scenarios defined. Exit: Critical issues resolved; test cases executed and documented |
| Risk Management | • Risks: Miscommunication and data handling issues • Mitigation: Requirement reviews and precise documentation |
| Test Deliverables | • Test Plan Document • Test Cases • Issue Logs • Execution Reports • Final Report |
| Test Cases and Scripts | Provide a link to the repository or documentation, including detailed integration test cases and scripts focusing on interaction scenarios between integrated components |
| Metrics & Reporting | • Defect Density • Test Coverage • Execution Progress • Final Report summarizing findings and readiness |
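For the CRM and email-service example, an integration test checks two things. The first is data flow: a new contact's details should reach the email service intact. The second is error handling: an email outage should not lose the contact. The Python `unittest` sketch below uses hypothetical `CRM` and `FakeEmailService` classes. The fake keeps the example self-contained; in an actual integration run you would point the CRM at a sandbox or test instance of the real email provider instead.

```python
import unittest
from dataclasses import dataclass, field


class EmailDeliveryError(Exception):
    """Raised by the email service when a message cannot be sent."""


@dataclass
class FakeEmailService:
    """Test double for the external email service (hypothetical interface)."""
    fail: bool = False
    sent: list = field(default_factory=list)

    def send(self, to: str, subject: str, body: str) -> None:
        if self.fail:
            raise EmailDeliveryError("email service unavailable")
        self.sent.append({"to": to, "subject": subject, "body": body})


class CRM:
    """Minimal CRM module that sends a welcome email when a contact is added."""

    def __init__(self, email_service):
        self.email = email_service
        self.contacts = {}
        self.pending_emails = []   # contacts whose welcome email must be retried

    def add_contact(self, name: str, address: str) -> None:
        self.contacts[address] = name
        try:
            self.email.send(address, "Welcome", f"Hi {name}, welcome aboard!")
        except EmailDeliveryError:
            self.pending_emails.append(address)


class CrmEmailIntegrationTest(unittest.TestCase):
    def test_new_contact_triggers_welcome_email(self):
        email = FakeEmailService()
        crm = CRM(email)
        crm.add_contact("Ada", "ada@example.com")
        # Data flow: the contact's details reach the email service intact
        self.assertEqual(len(email.sent), 1)
        self.assertEqual(email.sent[0]["to"], "ada@example.com")
        self.assertIn("Ada", email.sent[0]["body"])

    def test_email_outage_does_not_lose_contact(self):
        crm = CRM(FakeEmailService(fail=True))
        crm.add_contact("Ada", "ada@example.com")
        # Error handling: the contact is saved and queued for a retry
        self.assertIn("ada@example.com", crm.contacts)
        self.assertEqual(crm.pending_emails, ["ada@example.com"])
```

Run it with a standard test runner, for example `python -m unittest`.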

Final Thoughts

A well-structured test plan is an essential part of the software development process, providing clarity, direction, and consistency for the testing team. Whether you’re working on a small Agile project or a large Waterfall initiative, using the right test plan template can significantly improve your testing efficiency and help ensure a successful software release.

QA Touch is an efficient test management platform that can help streamline your testing efforts with pre-built templates and advanced test management features.

Book a demo to learn how we can support your testing process!